AI at banks and insurers: automatically fair?

(BaFinJournal) The automation of the financial industry is advancing at a rapid pace. Artificial intelligence and machine learning promise great economic opportunities. But their use also raises some critical questions.

By Lydia Albers, Dr Matthias Fahrenwaldt, Ulrike Kuhn-Stojic, Dr Martina Schneider, BaFin Banking Supervision and Insurance Supervision

In the financial industry, as in many other sectors, the use of artificial intelligence (AI) and machine learning (ML) is on the rise. These technologies can be used to accelerate processes and analyse large quantities of data quickly and effectively. But when machines make decisions, problems can arise. This is because their logic only appears neutral at first glance. Highly automated decision-making processes with little human monitoring can amplify existing discrimination risks.

Financial services providers (see info box) and state supervisory authorities must therefore take measures to prevent unjustified discrimination. But what exactly is discrimination?

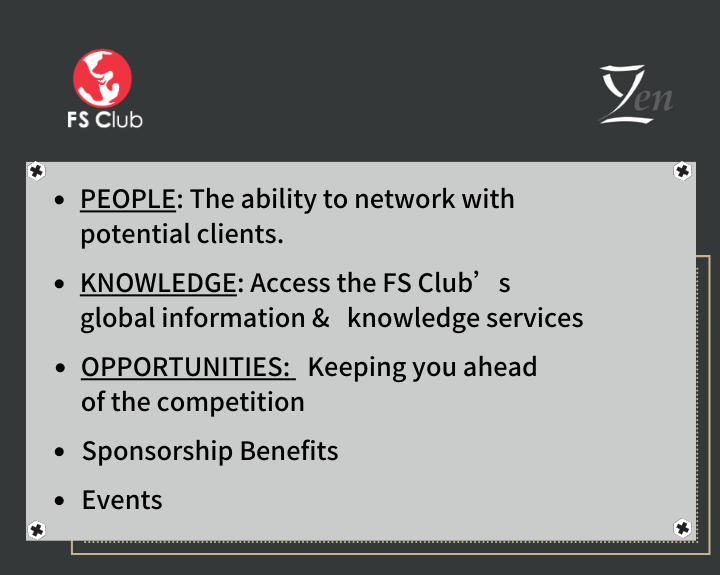

At a glance: Financial services provider

For the sake of simplicity, this article uses the term “financial services provider”. This term refers to insurance companies within the meaning of section 7 of the German Insurance Supervision Act (Versicherungsaufsichtsgesetz – VAG), credit institutions within the meaning of section 1 (1) of the German Banking Act (Kreditwesengesetz – KWG), the companies specified in section 1 (1a) of the KWG and investment services enterprises within the meaning of section 10 of the German Securities Trading Act (Wertpapierhandelsgesetz – WpHG).

EU law distinguishes between direct and indirect discrimination. In the case of direct discrimination, a person is treated less favourably on the basis of protected grounds (see info box “Protected grounds”). Indirect discrimination occurs when an apparently neutral rule disadvantages a person or a group sharing the same characteristics (see info box “Different forms of discrimination”). Indirect discrimination does not relate to discriminatory treatment itself, but to the impacts of seemingly neutral provisions, criteria and practices. In some cases it can be difficult to determine whether a situation is to be regarded as direct or indirect discrimination.

At a glance: Protected grounds

There is a diverse range of protected grounds or characteristics in natural persons. They depend on the culture, legal context and the specific situation. For example, European law prohibits direct and indirect discrimination on the grounds of race, ethnic background, nationality, gender, age, marital or family status, belief, religion, sexual orientation or disability.

Protected grounds vary according to the specific legal scope. Under German civil law, the General Act on Equal Treatment (Allgemeines Gleichbehandlungsgesetz – AGG), for example, specifies the following protected characteristics for retail business: race, ethnic origin, gender, religion, disability, age or sexual orientation (see sections 19 and 20 of the AGG). This list differs from the categories of personal data whose processing is prohibited under Article 9(1) of the General Data Protection Regulation (GDPR), since it does not include characteristics such as trade union membership, genetic data and biometric data. The differences in anti-discrimination legislation around the world make consistent regulation at the international level difficult.

Fairness is a multifaceted challenge

In the context of AI and ML, questions of discrimination are often discussed under the umbrella term of “fairness”. This term encompasses three important aspects.

Firstly, algorithmic fairness: here the design of algorithms should ensure that persons and groups of persons are treated uniformly. This is often achieved by means of quantitative approaches. In its simplest form, this may involve comparing the share of positive loan decisions for women with the share of positive decisions for men. However, such a statistical approach based on characteristics of groups of people is not suitable for identifying individual discrimination. For this reason, further-reaching measures may be required depending on the individual situation.
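The simple comparison described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `approval_rates` and the sample decisions are invented for this example and do not come from any real portfolio.

```python
from collections import defaultdict

def approval_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {group: approved[group] / totals[group] for group in totals}

# Hypothetical loan decisions, invented purely for illustration.
sample = [
    ("women", True), ("women", False), ("women", True), ("women", False),
    ("men", True), ("men", True), ("men", True), ("men", False),
]

rates = approval_rates(sample)
gap = rates["men"] - rates["women"]
print(rates, gap)
```

A gap between the two shares is a prompt for further review, not a verdict: as noted above, a group-level statistic of this kind cannot identify discrimination against an individual.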

Secondly, the term fairness relates to discrimination in the legal sense. This varies according to national jurisdiction. In Germany, for example, the General Act on Equal Treatment (AGG) sets out the definition of (legally) impermissible unequal treatment (sections 19 and 20 of the AGG). Protected grounds are also defined in this Act (see info box “Protected grounds”).

At a glance: Different forms of discrimination

If an older person was treated less favourably when receiving financial services on the grounds of their age, this would be an example of direct discrimination. An example of indirect discrimination might be a practice that makes such decisions on the basis of income. Since women earn less than men on average, they would be systematically disadvantaged by such a system.

Not all forms of unequal treatment are prohibited by law. If differentiation on the basis of age or income is objectively justified, this would be a permissible form of unequal treatment.

According to many experts, considering discrimination on the basis of individual protected grounds often fails to adequately account for the actual forms of discrimination experienced in daily life. This is because discrimination often results when various grounds for discrimination are considered simultaneously. Where several grounds operate and interact with each other at the same time in such a way that they are inseparable and produce specific types of discrimination, this is referred to as multiple or intersectional discrimination (see “Handbook on European non-discrimination law (2018 edition)”). For example, if a female applicant was rejected for a job because the employer assumed that, at her age, she would likely soon become pregnant and take long-term parental leave, this would constitute discrimination on the grounds of the protected characteristics gender and age.

Finally, the term fairness also relates to “bias”. In the 2021 publication “Big data and artificial intelligence: Principles for the use of algorithms in decision-making processes”, BaFin and the Deutsche Bundesbank described bias as the systematic distortion of results. The term bias has many dimensions. In the simplest cases, bias relates to the datasets used to train algorithms. In practice, datasets might exclude certain customer groups, such as single women. Such datasets would then not be representative of a company’s customer base, which could result in unintentional discrimination since the algorithm would be incapable of adequately accounting for this group of persons and would therefore make assessments without any corresponding empirical basis.
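One way to spot this kind of dataset bias is to compare each group's share in the training data with its share in the customer base. The following is a minimal sketch, assuming both datasets can be reduced to a list of group labels; the function names, the 5 percentage point tolerance and the figures are hypothetical.

```python
from collections import Counter

def group_shares(labels):
    """Return each group's share of the given list of group labels."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}

def underrepresented(training, customers, tolerance=0.05):
    """Find groups whose training share is below their customer share.

    Returns {group: (training_share, customer_share)} for every group whose
    shortfall exceeds `tolerance` (an assumed threshold, not a legal one).
    """
    train_shares = group_shares(training)
    customer_shares = group_shares(customers)
    return {
        group: (train_shares.get(group, 0.0), share)
        for group, share in customer_shares.items()
        if share - train_shares.get(group, 0.0) > tolerance
    }

# Invented example: single women make up 20% of customers
# but do not appear in the training data at all.
customers = ["single women"] * 20 + ["other"] * 80
training = ["other"] * 50

gaps = underrepresented(training, customers)
print(gaps)
```

A check like this only reveals missing or thin coverage; it says nothing about whether the data that is present reflects past discriminatory decisions.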

The relevant literature contains numerous approaches for ensuring fairness in AI/ML-modelling. Key indicators are often used to measure fairness in algorithmic decisions. However, each indicator stands for a specific definition of fairness, and the various indicators are not necessarily compatible. A different approach uses further algorithms to explain AI/ML algorithms. However, these algorithms also have their shortcomings: it is easy to create examples in which algorithms make highly unfair decisions while typical explainability tools fail to flag these as discrimination (see info box “Fairness metrics and explainable AI”). In these examples, explainable artificial intelligence tools fail to recognise a protected characteristic as a significant factor even though the algorithm proposes a decision based on precisely that variable.
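A small invented example may make the incompatibility of indicators concrete. The sketch below evaluates the same set of decisions with two indicators: the share of positive decisions per group, and the share of positive decisions among those who actually repaid (often called equal opportunity in the literature). The data and function names are assumptions made for illustration.

```python
def positive_rate(rows):
    """Share of applicants who received a positive decision."""
    return sum(predicted for _, predicted in rows) / len(rows)

def true_positive_rate(rows):
    """Share of positive decisions among applicants who actually repaid."""
    decisions_for_repayers = [predicted for actual, predicted in rows if actual == 1]
    return sum(decisions_for_repayers) / len(decisions_for_repayers)

# Each row is (actual outcome, predicted decision); 1 = repaid / approved.
# Hypothetical data: the two groups differ in how many applicants repaid.
group_a = [(1, 1), (1, 1), (1, 0), (0, 0)]
group_b = [(1, 1), (0, 1), (0, 0), (0, 0)]

for name, rows in (("A", group_a), ("B", group_b)):
    print(name, positive_rate(rows), true_positive_rate(rows))
```

Both groups are approved at the same rate, so the first indicator reports equal treatment. Among applicants who actually repaid, however, group A is approved less often than group B, so the second indicator reports unequal treatment of the very same decisions.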

At a glance: Fairness metrics and explainable AI

Various definitions of fairness have been proposed in ML research. One approach often used in practice applies statistical measures to assess whether groups of persons are treated the same. These measures are often referred to as fairness metrics.

There are three key variants:

1. Comparison of predicted probabilities for different groups of persons

2. Comparison of predicted and actual outcomes

3. Comparison of predicted probabilities and actual outcomes

The simplest example of variant 1 is group fairness. A statistical classification procedure would meet this definition if the probability of a positive result were the same among persons with protected and non-protected characteristics. The literature discusses numerous fairness metrics which often have different objectives and are incompatible with one another. The use of such approaches alone appears unsuitable for ensuring non-discrimination.
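Group fairness as described here can be written compactly. The notation is an assumption introduced for this illustration: $\hat{Y}$ denotes the predicted decision (1 for a positive result) and $A$ indicates whether a person has the protected characteristic (1) or not (0).

```latex
\[
P(\hat{Y} = 1 \mid A = 1) \;=\; P(\hat{Y} = 1 \mid A = 0)
\]
```

The condition only involves predictions, not actual outcomes, which is one reason why it can conflict with metrics from variants 2 and 3.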

In some instances, ML methods can also “learn” correlations from data that have no basis in reality. This may remain hidden due to the lack of transparency of such methods (often referred to as the “black box effect”).

Increasing importance is being attached to the explainability and plausibility of a model’s overall behaviour, as opposed to the comprehensibility of model calculations. The term “explainability” is multifaceted, because those who create, validate, supervise and use models all have different specialisations and diverse information needs.

Explainable artificial intelligence (XAI) techniques have been developed to allow the results of complex models to be explained. From a supervisory perspective, these techniques offer promising possibilities to mitigate the black box effect and increase transparency in AI systems. XAI techniques can be used to render the results or the inner workings of AI systems comprehensible for human users. However, they are themselves models with their own assumptions and shortcomings. Many such models are still being tested, particularly in the field of generative AI.

Generative artificial intelligence, for example large language models, can exacerbate the problem of a lack of fairness. Where such technologies are acquired from third-party providers (as is normally the case), users do not have any detailed information on the dataset used to train the models or on how they function. Such a lack of transparency is not found in conventional mathematical models, which clearly model causal relationships between various input factors and the model result, making them easier to understand.

BaFin addresses possible cases of discrimination

As part of its supervisory activities, BaFin takes account of possible cases of discrimination that may arise due to the automation of the financial industry.

In general, this issue is relevant to requirements for proper business organisation (in accordance with section 25a (1) sentence 1 of the German Banking Act and section 23 (1) of the German Insurance Supervision Act). In other words, these general governance requirements also apply to AI/ML processes. Firms must therefore adapt or expand their governance processes to take account of AI/ML. Current supervisory law already allows BaFin to address unjustified discrimination in the context of AI/ML and to demand that firms comply with the relevant requirements. In its supervision of compliance with rules of conduct, BaFin expects institutions and companies to clearly set out responsibilities. This also applies to the use of AI/ML. To mitigate risks, it is also key to raise awareness and provide appropriate training for staff members entrusted with the development and use of AI/ML. Furthermore, the General Data Protection Regulation sets out the extent to which persons may be subject to decisions based on automated processing or profiling (Article 22 of the GDPR).

The European Artificial Intelligence Act (see info box “The Artificial Intelligence Act”), which entered into force on 1 August 2024, is an important step in the legal treatment of bias risks in AI systems. In order to prevent discrimination and promote fairness and transparency, the Regulation sets out comprehensive rules for AI systems, in particular high-risk AI systems, which include models used to evaluate credit scores or creditworthiness alongside several applications in life and health insurance. These rules cover risk management and quality management, in addition to detailed documentation and high data quality standards. Article 10 of the AI Act also contains specific rules for minimising bias.

At a glance: The Artificial Intelligence Act

The European Artificial Intelligence Act (AI Act) entered into force on 1 August 2024. The AI Act sets out horizontal and cross-sectoral rules. It aims to promote the uptake of human-centric and trustworthy artificial intelligence. The Regulation sets out a legal framework for the development, application and use of artificial intelligence in the European Union. It focusses on the protection of health, safety, fundamental rights, democracy, the rule of law and environmental protection. At the same time, the legal framework is intended to promote innovation and improve the functioning of the internal market.

The AI Act follows a risk-based approach to regulation. This means the degree of intervention increases in line with the level of risk posed to the relevant legal interests. The Regulation sets out four risk levels:

· Prohibition of AI systems that pose unacceptable risks (for example social scoring systems).

· Requirements for AI systems posing a high risk to the health, safety or fundamental rights of natural persons. Such high-risk AI systems are permitted on the European market provided they meet specific requirements. However, they must first be subject to a conformity assessment.

· Transparency and information requirements for certain AI applications that pose limited risks, such as chatbots or deepfakes. This risk category also includes generative AI capable of producing content such as texts or images.

· AI systems not requiring regulation. This includes applications such as AI-supported video games or spam filters.

The AI Act is also relevant for the financial market. AI systems used to evaluate the creditworthiness of natural persons or establish their credit score and those used for risk assessment and pricing in relation to natural persons in the case of life and health insurance are classified as high-risk AI systems. The classification of these systems as high-risk AI systems is based primarily on the considerable impact that such applications could have on the livelihoods of persons and their fundamental rights. Such risks could include limitation on access to medical care or illegal discrimination based on personal characteristics.

The European Parliament adopted the AI Act on 13 March 2024. It was approved by the Council on 21 May 2024. The Act was published in the Official Journal of the European Union on 12 July 2024 and entered into force on 1 August 2024. The various parts of the Act will apply successively over the next two years.

Financial services providers must prevent unjustified discrimination

For BaFin, one thing is clear: financial services providers must prevent unjustified discrimination against their customers caused by the use of AI/ML. Companies must establish review processes in order to identify possible sources of discrimination and take measures to mitigate them. As part of this, they must consider the limits of existing procedures for preventing unintended discrimination. Reliable and transparent governance and data management are crucial in order to ensure the fair and non-discriminatory treatment of consumers.

In some cases, human supervision may be essential to guarantee responsible operation, compensate for any technical deficiencies, and close any data gaps. Furthermore, in their choice of AI model, companies can make a considerable contribution to promoting transparency. Where results are comparable, simple models (for example logistic regression, which is commonly used today) are to be favoured over more complex black box methods. Such simple models are not only easier to understand and interpret, they also allow bias to be better identified.
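The transparency of a simple model can be illustrated with a logistic regression in which every input has a single, visible weight. The sketch below is not a real scoring model: the feature names and coefficients are hypothetical values chosen for illustration.

```python
import math

# Hypothetical coefficients, invented for illustration only.
INTERCEPT = -1.0
WEIGHTS = {"income_thousands": 0.05, "existing_loans": -0.4}

def approval_probability(applicant):
    """Logistic regression: a weighted sum passed through the sigmoid function."""
    score = INTERCEPT + sum(WEIGHTS[name] * applicant[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-score))

def odds_ratios():
    """Factor by which the odds of approval change per unit of each input."""
    return {name: math.exp(weight) for name, weight in WEIGHTS.items()}

applicant = {"income_thousands": 40, "existing_loans": 1}
p = approval_probability(applicant)
print(round(p, 3), odds_ratios())
```

Because each weight translates directly into an odds ratio, a reviewer can read off the direction and strength of every input. If a protected characteristic, or an obvious proxy for one, carried a large weight, it would be visible in the model itself rather than hidden inside a black box.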

If supervised companies’ use of AI and ML methods results in illegal discrimination, BaFin will take decisive action and implement suitable measures, for example in the context of supervising violations of statutory provisions.

The AI Act provides a sound regulatory framework for this. It lays down EU-wide legal requirements for companies regarding explainability, transparency and fairness. And there is a chance that it will also set EU-wide standards – for an effective supervisory approach to unjustified discrimination by AI/ML in the financial industry.
